Ever wondered how computers "see" and make sense of images? While humans can easily recognize shapes, patterns, and boundaries within a picture, teaching a computer to do the same isn’t so straightforward.

This is where edge detection comes in—a key technique in image processing that enables machines to identify the lines and contours in an image. It gives the computer a pair of eyes to focus on the important details.

What is Edge Detection?

In most basic terms, edge detection is about finding the outlines or boundaries of objects within an image. When you look at a photograph, the edges help define what’s in the picture—whether it's the edge of a tree, a building, or someone's face.

In computer vision, edge detection refers to the process of identifying these significant changes in brightness, color, or texture, which indicate the presence of an edge.

Imagine you’re looking at a black-and-white image. The bright areas and dark areas create contrasts, and these contrasts are what form edges.

Edge detection algorithms scan an image pixel by pixel, searching for these contrasts. When the algorithm detects a big enough change in intensity between neighboring pixels, it marks that as an edge.
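The scan described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a production detector. It compares each pixel only with its right and lower neighbors, and the function name `mark_edges` and the threshold of 50 are hypothetical choices.

```python
import numpy as np


def mark_edges(image: np.ndarray, threshold: float) -> np.ndarray:
    """Return a boolean map that is True where a pixel differs from its
    right or lower neighbor by more than `threshold` (hypothetical helper)."""
    img = image.astype(float)
    edges = np.zeros(img.shape, dtype=bool)
    # Compare each pixel with the pixel to its right; the edge is recorded
    # on the left pixel of each pair whose contrast exceeds the threshold.
    edges[:, :-1] |= np.abs(np.diff(img, axis=1)) > threshold
    # Compare each pixel with the pixel below it.
    edges[:-1, :] |= np.abs(np.diff(img, axis=0)) > threshold
    return edges


# A dark region (10) next to a bright region (200).
img = np.array([[10, 10, 200, 200]] * 4)
print(mark_edges(img, threshold=50).astype(int))  # column 1 is marked in every row
```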

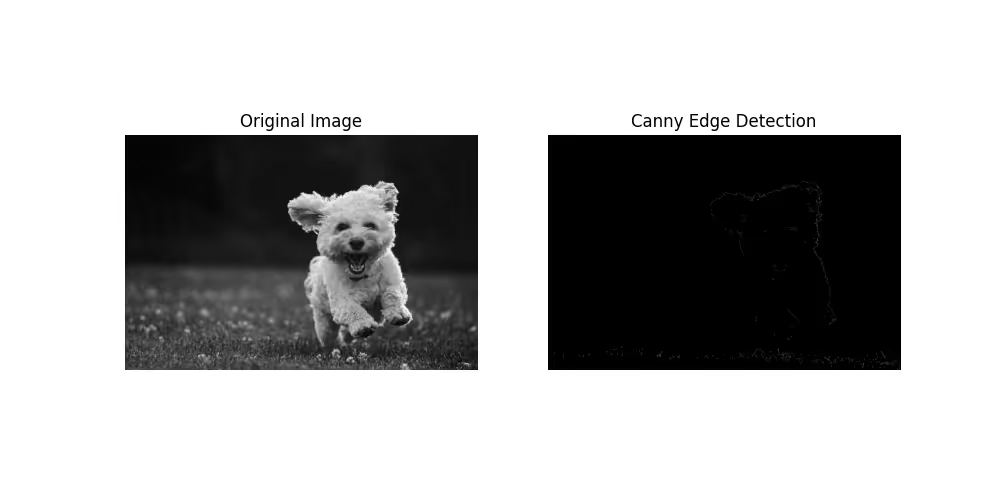

Example

Here’s a simple edge detection Python example using OpenCV:

How Edge Detection Works

Edge detection in image processing is based on examining the contrast between adjacent pixels. Because these computations are simple, it can also run directly on edge computing devices.

When you look at an image, some areas are bright, and others are dark. This difference in brightness creates edges. Edge detection algorithms work by scanning an image and detecting these contrasts.

An edge detection filter is often applied to highlight these areas, making it easier to identify edges automatically. This filter enhances the visibility of the boundaries, allowing the computer to process the image more effectively.

Techniques in Edge Detection

When it comes to the maths and science behind edge detection, several key techniques are employed to pinpoint where edges are located in an image.


1. Gradient-Based Edge Detection

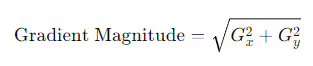

The most common technique for detecting edges is based on calculating the gradient of pixel intensities. The gradient measures the change in intensity at a point in the image, and edges correspond to points where this change is locally greatest.

Mathematically, the gradient of an image is a vector that points in the direction of the most significant intensity change. It is calculated using partial derivatives, typically represented as:

- G_x: The change in intensity along the x-axis (horizontal).

- G_y: The change in intensity along the y-axis (vertical).

The magnitude of the gradient, which represents the strength of the edge, can be computed as:

$$ G = \sqrt{G_x^2 + G_y^2} $$

This magnitude gives us the rate of change in intensity, while the direction of the gradient,

$$ \theta = \operatorname{atan2}(G_y, G_x), $$

helps determine the orientation of the edge, which runs perpendicular to θ.
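A minimal NumPy sketch of these formulas. It assumes `np.gradient` (simple central differences) is an acceptable stand-in for G_x and G_y; dedicated operators such as Sobel are a common alternative. Rows are treated as y (increasing downward) and columns as x, and `gradient_magnitude_direction` is a hypothetical name.

```python
import numpy as np


def gradient_magnitude_direction(image: np.ndarray):
    """Approximate G_x, G_y with central differences, then return the
    gradient magnitude and direction (radians) at every pixel."""
    img = image.astype(float)
    # np.gradient returns the derivative along axis 0 (rows, y pointing down)
    # first, then along axis 1 (columns, x pointing right).
    g_y, g_x = np.gradient(img)
    magnitude = np.hypot(g_x, g_y)    # sqrt(G_x**2 + G_y**2)
    direction = np.arctan2(g_y, g_x)  # angle of steepest increase, in radians
    return magnitude, direction


img = np.array([[10, 10, 200, 200]] * 4)
mag, ang = gradient_magnitude_direction(img)
print(mag[0])  # strong response (95) at the two pixels straddling the jump, 0 elsewhere
print(ang[0])  # gradient points along +x, so the edge itself runs vertically
```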

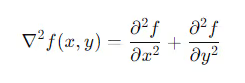

2. Laplacian-Based Edge Detection

Another key method is the Laplacian technique, which focuses on finding edges by calculating the second derivative of the image.

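Unlike the gradient, which measures the rate of change (the first derivative), the Laplacian measures how that rate of change itself changes. Edges show up as zero crossings, where the second derivative switches sign. This makes it particularly useful for identifying both fine details and sharper edges, though it also amplifies noise.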

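For an image with intensity I(x, y), the Laplacian is the sum of the second partial derivatives:

$$ \nabla^2 I = \frac{\partial^2 I}{\partial x^2} + \frac{\partial^2 I}{\partial y^2} $$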

In practice, the Laplacian is often combined with Gaussian smoothing to reduce noise before edge detection, a technique known as the Laplacian of Gaussian (LoG).
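A minimal NumPy sketch of the discrete Laplacian and zero-crossing detection. It assumes the common 3x3 four-neighbor kernel and skips the Gaussian smoothing step of LoG for brevity; on real, noisy images you would blur first. The names `laplacian`, `zero_crossings`, and `min_jump` are hypothetical.

```python
import numpy as np

# 3x3 discrete approximation of d2I/dx2 + d2I/dy2 (four-neighbor form).
LAPLACIAN = np.array([[0, 1, 0],
                      [1, -4, 1],
                      [0, 1, 0]], dtype=float)


def laplacian(image: np.ndarray) -> np.ndarray:
    """Apply the Laplacian kernel with replicated borders."""
    h, w = image.shape
    padded = np.pad(image.astype(float), 1, mode="edge")
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += LAPLACIAN[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def zero_crossings(lap: np.ndarray, min_jump: float = 0.0) -> np.ndarray:
    """Mark pixels where the Laplacian changes sign against the right or
    lower neighbor, optionally requiring a minimum jump in value."""
    edges = np.zeros(lap.shape, dtype=bool)
    horiz = (lap[:, :-1] * lap[:, 1:] < 0) & (np.abs(lap[:, :-1] - lap[:, 1:]) > min_jump)
    vert = (lap[:-1, :] * lap[1:, :] < 0) & (np.abs(lap[:-1, :] - lap[1:, :]) > min_jump)
    edges[:, :-1] |= horiz
    edges[:-1, :] |= vert
    return edges


img = np.array([[10, 10, 10, 200, 200, 200]] * 3)
lap = laplacian(img)
print(lap[1])                              # positive just before the step, negative just after
print(zero_crossings(lap)[1].astype(int))  # the sign change marks column 2
```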

3. Thresholding

After computing the gradient or Laplacian, the next step is often to apply a thresholding technique. This means choosing a specific value and treating only points whose gradient magnitude (or Laplacian response) exceeds that value as edges.

Thresholding helps eliminate small fluctuations that might not correspond to real edges and focuses only on the most prominent boundaries.
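A minimal NumPy sketch of single-value thresholding on a gradient-magnitude map. The fallback of using a fraction of the strongest response is an illustrative assumption rather than a standard rule, and `threshold_edges` and `ratio` are hypothetical names.

```python
import numpy as np


def threshold_edges(magnitude: np.ndarray, threshold=None, ratio: float = 0.2) -> np.ndarray:
    """Keep only pixels whose edge strength exceeds a threshold.

    If no absolute threshold is given, fall back to a fraction (`ratio`)
    of the strongest response; this relative rule and the default of 0.2
    are illustrative assumptions."""
    if threshold is None:
        threshold = ratio * float(magnitude.max())
    return magnitude > threshold


mag = np.array([[0.0, 5.0, 95.0, 3.0],
                [1.0, 40.0, 80.0, 0.0]])
print(threshold_edges(mag, threshold=50.0).astype(int))  # only 95 and 80 survive
print(threshold_edges(mag).astype(int))                  # relative cutoff (19) also keeps 40
```

A fixed threshold is easy to reason about but has to be retuned for every lighting condition; a relative one adapts to overall contrast at the cost of always reporting something, even in a nearly flat image.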

4. Non-Maximum Suppression

In many edge detection algorithms, particularly in Canny edge detection, a technique called non-maximum suppression is used. After calculating the gradient magnitude and direction, this method ensures that only the strongest points along the edge are kept.

It works by comparing each pixel's gradient magnitude to its two neighbors along the gradient direction, that is, across the edge rather than along it. If the pixel isn’t a local maximum, it’s suppressed (set to zero), leaving thin, clean edges.
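A minimal, loop-based sketch of non-maximum suppression. It assumes the direction comes from `arctan2(G_y, G_x)` with x pointing right and y pointing down (the row index), and it quantizes that direction into four bins (0, 45, 90, and 135 degrees), a common simplification. Border pixels are simply set to zero, and `non_maximum_suppression` is a hypothetical name.

```python
import numpy as np


def non_maximum_suppression(magnitude: np.ndarray, direction: np.ndarray) -> np.ndarray:
    """Keep a pixel only if it is at least as strong as both neighbors
    along the gradient direction (across the edge); ties are kept."""
    h, w = magnitude.shape
    out = np.zeros_like(magnitude)
    # Opposite gradient directions share the same neighbors, so fold to [0, 180).
    angle = np.rad2deg(direction) % 180.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            a = angle[y, x]
            if a < 22.5 or a >= 157.5:   # gradient roughly horizontal: left/right
                n1, n2 = magnitude[y, x - 1], magnitude[y, x + 1]
            elif a < 67.5:               # roughly 45 degrees (down-right)
                n1, n2 = magnitude[y - 1, x - 1], magnitude[y + 1, x + 1]
            elif a < 112.5:              # gradient roughly vertical: up/down
                n1, n2 = magnitude[y - 1, x], magnitude[y + 1, x]
            else:                        # roughly 135 degrees (down-left)
                n1, n2 = magnitude[y - 1, x + 1], magnitude[y + 1, x - 1]
            if magnitude[y, x] >= n1 and magnitude[y, x] >= n2:
                out[y, x] = magnitude[y, x]
    return out


# A blurry vertical edge: a 3-pixel-wide ridge of gradient magnitude,
# all with a horizontal gradient direction (0 rad).
mag = np.array([[0.0, 50.0, 100.0, 50.0, 0.0]] * 5)
thin = non_maximum_suppression(mag, np.zeros_like(mag))
print(thin[2])  # only the 100 at the ridge center survives
```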

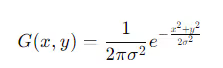

5. Smoothing (Gaussian Blur)

Before applying gradient-based techniques, it's common to reduce noise in the image using smoothing filters like the Gaussian blur.

This reduces small variations in pixel values that could be mistaken for edges. The Gaussian filter smooths the image by taking a weighted average of neighboring pixels, with weights that fall off with distance. This reduces noise while keeping significant edges, although they become somewhat softer.

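Mathematically, Gaussian smoothing is performed by convolving the image with a Gaussian function, which has the form:

$$ G_\sigma(x, y) = \frac{1}{2\pi\sigma^2} \exp\left(-\frac{x^2 + y^2}{2\sigma^2}\right) $$

where σ, the standard deviation, determines the level of smoothing: a larger σ averages over a wider neighborhood and blurs more.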
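A minimal NumPy sketch of Gaussian blurring. It samples the Gaussian at integer offsets out to about 3σ (a common rule of thumb) and normalizes the samples to sum to 1 instead of using the 1/(2πσ²) factor. It also exploits the fact that the 2D Gaussian is separable, so it blurs rows and then columns. The function names are hypothetical.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float, radius=None) -> np.ndarray:
    """Sampled 1-D Gaussian, normalized so the weights sum to 1."""
    if radius is None:
        radius = int(np.ceil(3 * sigma))  # +/- 3 sigma holds ~99.7% of the weight
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(image: np.ndarray, sigma: float) -> np.ndarray:
    """Blur with a separable Gaussian: filter every row, then every column.
    Borders are handled by replicating the edge pixels."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(image.astype(float), r, mode="edge")
    # Horizontal pass: each padded row shrinks back to the original width.
    tmp = np.apply_along_axis(lambda row: np.convolve(row, k, mode="valid"), 1, padded)
    # Vertical pass: each column shrinks back to the original height.
    return np.apply_along_axis(lambda col: np.convolve(col, k, mode="valid"), 0, tmp)


spike = np.zeros((9, 9))
spike[4, 4] = 100.0  # a single noisy pixel
blurred = gaussian_blur(spike, sigma=1.0)
print(round(blurred[4, 4], 2), round(blurred.sum(), 2))  # peak drops, total brightness is kept
```

Filtering rows and then columns costs two passes of length about 6σ per pixel instead of one 2D pass of roughly (6σ)² weights, which is why separable implementations are the usual choice.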

Importance of Edge Detection in Image Processing

Edge detection is crucial because it simplifies the image data while preserving the essential structural elements. By reducing unnecessary details, it becomes easier to analyze, interpret, and recognize patterns in an image.

Whether it’s for object recognition, image segmentation, or computer graphics, edge detection serves as a foundational tool in image processing tasks.

For instance, in medical imaging, edge detection helps highlight critical areas, such as tumor boundaries. In automotive technology, edge detection assists in recognizing lanes and obstacles for self-driving cars.

Common Edge Detection Algorithms

There are several edge detection algorithms commonly used in image processing:

1. Sobel Operator

The Sobel operator is a widely used method for edge detection that calculates the gradient of an image's intensity. It uses two convolution masks to detect edges along horizontal and vertical orientations.
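A minimal NumPy sketch of the Sobel operator, assuming the standard 3x3 masks and replicated borders. Like most image libraries, it slides the mask without flipping it (correlation). Flipping would only change the sign of G_x and G_y, not the magnitude. `correlate3x3` and `sobel` are hypothetical helper names.

```python
import numpy as np

# Standard Sobel masks: G_x responds to left-right intensity changes
# (vertical edges), G_y to top-bottom changes (horizontal edges).
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def correlate3x3(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide a 3x3 kernel over the image with replicated borders."""
    h, w = image.shape
    padded = np.pad(image.astype(float), 1, mode="edge")
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def sobel(image: np.ndarray):
    """Return gradient magnitude and direction (radians) using Sobel masks."""
    g_x = correlate3x3(image, SOBEL_X)
    g_y = correlate3x3(image, SOBEL_Y)
    return np.hypot(g_x, g_y), np.arctan2(g_y, g_x)


img = np.array([[10, 10, 200, 200]] * 4)
magnitude, direction = sobel(img)
print(magnitude[1])  # 4 * (200 - 10) = 760 in the two columns beside the step
```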

2. Canny Edge Detection

Canny edge detection is known for its multi-step process that reduces noise and precisely detects edges. It’s like a detective carefully piecing together clues (edges) after filtering out distractions (noise).

Canny combines Gaussian smoothing, gradient calculation, non-maximum suppression, and a double threshold with hysteresis, so that only strong edges, and weaker edge pixels connected to them, survive.
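The final thresholding step in Canny uses two thresholds with hysteresis. Pixels above the high threshold are accepted outright, and pixels between the two thresholds are kept only if they connect to an accepted pixel. This is a minimal sketch, assuming 8-connectivity and an already-thinned magnitude map; `hysteresis`, `low`, and `high` are hypothetical names. In OpenCV, the `threshold1` and `threshold2` arguments of `cv2.Canny` play the role of these two thresholds.

```python
from collections import deque

import numpy as np


def hysteresis(magnitude: np.ndarray, low: float, high: float) -> np.ndarray:
    """Strong pixels (> high) are edges; weak pixels (> low) are kept only
    if 8-connected, directly or through other weak pixels, to a strong one."""
    strong = magnitude > high
    weak = magnitude > low  # includes the strong pixels
    edges = strong.copy()
    h, w = magnitude.shape
    queue = deque(zip(*np.nonzero(strong)))  # start from every strong pixel
    while queue:
        y, x = queue.popleft()
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w and weak[ny, nx] and not edges[ny, nx]:
                    edges[ny, nx] = True
                    queue.append((ny, nx))
    return edges


mag = np.array([[0, 30, 30, 100, 0, 30]], dtype=float)
print(hysteresis(mag, low=20, high=80).astype(int))  # the isolated weak pixel at the end is dropped
```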

3. Prewitt Operator

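The Prewitt operator is similar to Sobel, but its masks weight every neighboring pixel equally (all coefficients are ±1), whereas Sobel gives the center row or column a weight of 2. This makes it slightly simpler and cheaper to compute, but it smooths less and is more sensitive to noise. It's like a quick sketch: good for rough outlines but not detailed enough for precise work.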

Prewitt is often used where speed is more important than accuracy.
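For comparison with Sobel, here are the Prewitt masks applied to a single 3x3 patch. This is a minimal sketch; sliding the same computation over every pixel gives a full edge map. `prewitt_at` is a hypothetical helper.

```python
import numpy as np

# Prewitt masks: every neighbor has weight 1 (Sobel uses 2 for the center row/column).
PREWITT_X = np.array([[-1, 0, 1],
                      [-1, 0, 1],
                      [-1, 0, 1]], dtype=float)
PREWITT_Y = PREWITT_X.T


def prewitt_at(patch: np.ndarray) -> float:
    """Gradient magnitude at the center of one 3x3 patch."""
    g_x = float(np.sum(PREWITT_X * patch))
    g_y = float(np.sum(PREWITT_Y * patch))
    return float(np.hypot(g_x, g_y))


patch = np.array([[10, 10, 200],
                  [10, 10, 200],
                  [10, 10, 200]], dtype=float)
print(prewitt_at(patch))  # G_x = 3 * (200 - 10) = 570, G_y = 0, so 570.0
```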

4. Roberts Cross Operator

The Roberts Cross operator is designed to detect diagonal edges using 2x2 convolution kernels, making it computationally efficient but more prone to noise.

Imagine trying to identify diagonal lines in a picture with a magnifying glass. It’s quick, but because you’re focusing on one small section at a time, you might miss some of the bigger picture.
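A minimal NumPy sketch of the Roberts Cross operator, assuming the standard pair of 2x2 diagonal-difference kernels. Because each output depends on only four pixels and there is no smoothing, a single noisy pixel changes the result directly. `roberts` is a hypothetical helper, and its output is one row and one column smaller than the input.

```python
import numpy as np

# Roberts Cross kernels: differences along the two diagonals of a 2x2 block.
ROBERTS_1 = np.array([[1, 0],
                      [0, -1]], dtype=float)
ROBERTS_2 = np.array([[0, 1],
                      [-1, 0]], dtype=float)


def roberts(image: np.ndarray) -> np.ndarray:
    """Gradient magnitude from the two diagonal differences of each 2x2 block."""
    img = image.astype(float)
    h, w = img.shape
    out = np.zeros((h - 1, w - 1))
    for y in range(h - 1):
        for x in range(w - 1):
            block = img[y:y + 2, x:x + 2]
            g1 = np.sum(ROBERTS_1 * block)  # top-left minus bottom-right
            g2 = np.sum(ROBERTS_2 * block)  # top-right minus bottom-left
            out[y, x] = np.hypot(g1, g2)
    return out


img = np.array([[10, 200],
                [10, 200]])
print(roberts(img))  # a single value, 190 * sqrt(2), about 268.7
```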

Applications of Edge Detection

The applications of edge detection are vast and can be found in many areas, from everyday technology to highly specialized fields:

- Computer Vision: Edge detection is fundamental in tasks like facial recognition, motion detection, and 3D reconstruction. It helps machines interpret and understand visual data in a way similar to how humans do.

- Medical Imaging: Doctors use edge detection to highlight structures in X-rays, MRIs, and CT scans, helping them diagnose conditions more accurately.

- Self-Driving Cars: Vehicles equipped with cameras use edge detection to identify lanes, obstacles, and traffic signs, ensuring safe navigation.

- Image Editing Software: Applications like Photoshop and other online tools utilize edge detection to allow users to make precise selections and enhancements.

In fact, with advancements in technology, you can even find online edge detection tools where you can upload your images and see how different algorithms process them.


Evaluating Edge Detection Performance

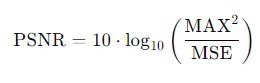

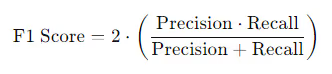

Two common metrics used to measure performance are the Peak Signal-to-Noise Ratio (PSNR) and the F1 Score, each suited for different applications.

1. Peak Signal-to-Noise Ratio (PSNR)

PSNR is used to assess how well an algorithm preserves edges while minimizing noise. This metric is crucial in areas like satellite imaging, where maintaining fine details is important.

PSNR compares the square of the maximum possible pixel value (255 for 8-bit images) with the mean squared error between the algorithm's output and a reference image, on a logarithmic (decibel) scale. A higher PSNR means the output is closer to the reference, with less noise.
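In formula form, PSNR = 10 · log10(MAX² / MSE), where MAX is the largest possible pixel value and MSE is the mean squared error against the reference. A minimal NumPy sketch, assuming 8-bit images (MAX = 255) and a hypothetical reference image to compare against:

```python
import numpy as np


def psnr(reference: np.ndarray, result: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio, in decibels, between two same-sized images."""
    ref = reference.astype(float)
    res = result.astype(float)
    mse = np.mean((ref - res) ** 2)
    if mse == 0:
        return float("inf")  # identical images: no noise at all
    return float(10.0 * np.log10(max_value ** 2 / mse))


reference = np.zeros((4, 4))
result = reference + 1  # every pixel off by one
print(round(psnr(reference, result), 2))  # about 48.13 dB
```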

2. F1 Score

The F1 Score is key in applications like medical imaging, where both precision (the share of detected edges that are real) and recall (the share of real edges that are detected) matter. The F1 score is the harmonic mean of the two, which makes it well suited to evaluating the detection of critical features like tumor boundaries.

A high F1 score indicates few false positives (non-edges detected as edges) and few false negatives (actual edges missed).
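Concretely, precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 = 2 · precision · recall / (precision + recall). A minimal pixel-wise sketch, assuming boolean edge maps of equal size. Published benchmarks often tolerate small positional offsets between detected and true edges, which this exact-match version does not. `edge_f1` is a hypothetical name.

```python
import numpy as np


def edge_f1(predicted, ground_truth):
    """Pixel-wise precision, recall, and F1 for two boolean edge maps."""
    pred = np.asarray(predicted, dtype=bool)
    truth = np.asarray(ground_truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # detected edges that are real
    fp = int(np.sum(pred & ~truth))   # non-edges reported as edges
    fn = int(np.sum(~pred & truth))   # real edges that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


predicted = np.array([1, 1, 0, 0])
truth = np.array([1, 0, 1, 0])
print(edge_f1(predicted, truth))  # one hit, one false positive, one miss: (0.5, 0.5, 0.5)
```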

Conclusion

Edge detection is a vital tool in image processing and computer vision. It simplifies image data by identifying the most critical parts of an image—its edges—allowing computers to process visual information more effectively. With numerous edge detection algorithms available, each suited for different tasks, it is a flexible technique applied in many industries, from medical imaging to autonomous vehicles.
